How to install Apache Spark for Scala 2.11.8 on Windows

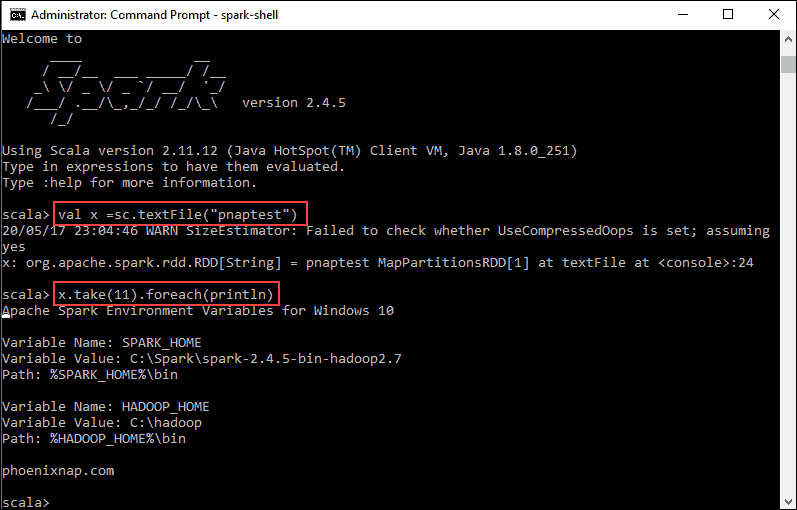

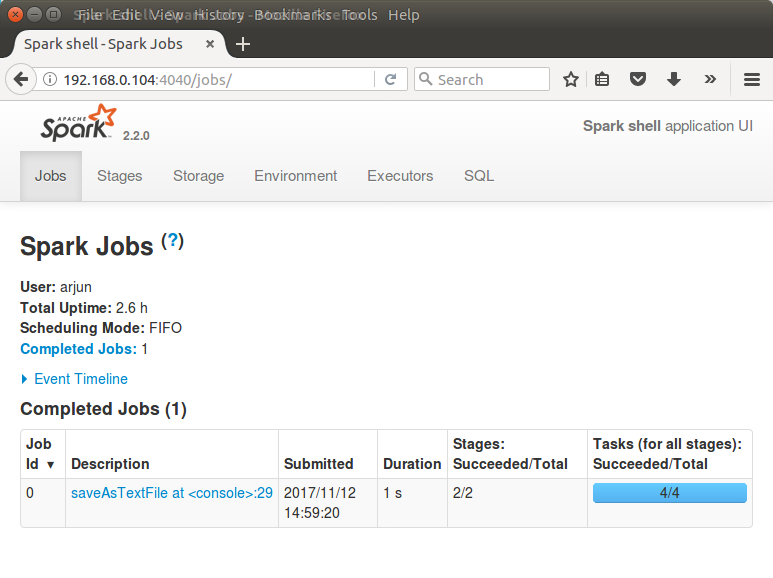

With this, you have successfully installed Apache Spark on your system.

Step 7: Verify the Installation of Spark on your system

The following command opens the Spark shell: `$ spark-shell`

If Spark is installed successfully, you will get output like the following:

    Spark assembly has been built with Hive, including Datanucleus jars on classpath
    Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
    15/06/04 15:25:22 INFO SecurityManager: Changing view acls to: hadoop
    15/06/04 15:25:22 INFO SecurityManager: Changing modify acls to: hadoop
    15/06/04 15:25:22 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)
    15/06/04 15:25:22 INFO HttpServer: Starting HTTP Server
    15/06/04 15:25:23 INFO Utils: Successfully started service 'HTTP class server' on port 43292.
    Using Scala version 2.10.4 (Java HotSpot(TM) 64-Bit Server VM, Java 1.7.0_71)
    Type in expressions to have them evaluated.
    Spark context available as sc.
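
Beyond reading the startup log, you can have the shell run a tiny job. The following is a minimal sketch. It assumes `spark-shell` is on your `PATH`. It also assumes that piping input into the shell evaluates each line and exits at end of input, as the Scala REPL does. The `saw_sum` helper is hypothetical. The expression uses the `sc` SparkContext that the shell creates and sums the numbers 1 to 100 on Spark.

```shell
#!/bin/sh
# Sketch: non-interactive smoke test for the Spark installation.
# Pipes one Scala expression into spark-shell, which evaluates it with its
# prebuilt SparkContext `sc` and should exit when its input ends.

# Expected result: 1 + 2 + ... + 100 = 5050.
EXPR='println(sc.parallelize(1 to 100).reduce(_ + _))'

# Hypothetical helper: succeeds if the REPL output on stdin contains 5050.
saw_sum() {
  grep -q '5050'
}

if command -v spark-shell >/dev/null 2>&1; then
  # Spark's default logging goes to stderr; discard it and search stdout only.
  if echo "$EXPR" | spark-shell 2>/dev/null | saw_sum; then
    echo "spark-shell evaluated the test job correctly."
  else
    echo "spark-shell ran but did not print 5050; check its log output." >&2
  fi
else
  echo "spark-shell not found; add Spark's bin directory to PATH." >&2
fi
```

If the check fails, run `spark-shell` interactively and paste the same expression. This shows the full log next to the result.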

Now you have reached the core of this tutorial, the 'Download Apache Spark' section. Once Java and Scala are ready on your system, go to Step 5.

Step 5: Download Apache Spark

After installing Java and Scala, download the latest version of Spark from the Apache Spark downloads page. This tutorial uses the spark-1.3.1-bin-hadoop2.6 version. After downloading, you will find the Spark tar file in the Downloads folder.

Step 6: Install Apache Spark

Next, install Apache Spark from the downloaded tar file.
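Downloading and installing the Spark package can be sketched as a script. It makes three assumptions: the Apache release-archive URL layout built by `spark_url`, `~/Downloads` as the download folder, and `/usr/local/spark` as the install directory. `SPARK_DEST`, `DO_INSTALL`, `spark_package` and `spark_url` are hypothetical names. By default the script only prints what it would do. Check the URL against the official downloads page before running it with `DO_INSTALL=1`.

```shell
#!/bin/sh
# Sketch: download and install the Spark build used in this tutorial.
# Assumptions: the archive URL layout below, ~/Downloads as the download
# folder, /usr/local/spark as the install directory (needs write access).
# DO_INSTALL is a hypothetical switch; without it the script only prints.
SPARK_VERSION=${SPARK_VERSION:-1.3.1}
HADOOP_PROFILE=${HADOOP_PROFILE:-hadoop2.6}
SPARK_DEST=${SPARK_DEST:-/usr/local/spark}

# Package name, e.g. spark-1.3.1-bin-hadoop2.6
spark_package() {
  echo "spark-$1-bin-$2"
}

# Assumed download URL for a Spark version and Hadoop profile.
spark_url() {
  echo "https://archive.apache.org/dist/spark/spark-$1/$(spark_package "$1" "$2").tgz"
}

pkg=$(spark_package "$SPARK_VERSION" "$HADOOP_PROFILE")
url=$(spark_url "$SPARK_VERSION" "$HADOOP_PROFILE")
archive="$HOME/Downloads/$pkg.tgz"

if [ "${DO_INSTALL:-0}" = 1 ]; then
  # Download the tar file, unpack it next to the archive, move it into place.
  curl -fL -o "$archive" "$url" &&
    tar -xzf "$archive" -C "$HOME/Downloads" &&
    mv "$HOME/Downloads/$pkg" "$SPARK_DEST" &&
    echo "Done. Put Spark on your PATH with: export PATH=\$PATH:$SPARK_DEST/bin"
else
  echo "Dry run: would download $url"
  echo "and install $pkg into $SPARK_DEST (set DO_INSTALL=1 to proceed)."
fi
```

Keeping the version and Hadoop profile in variables means that switching to another build only changes two values.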

Step 4: Install Scala

To install Scala on your system, extract the Scala tar file, then move the Scala software files to the /usr/local/scala directory. Set the PATH for Scala with the following command: `$ export PATH=$PATH:/usr/local/scala/bin`

Now verify the installation of Scala by checking its version with `$ scala -version`. If your Scala installation is successful, you will get the following output: `Scala code runner version 2.11.6 - Copyright 2002-2013, LAMP/EPFL`
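The Scala installation steps can be combined into one script. This is a sketch under the following assumptions:

- The archive is `~/Downloads/scala-2.11.6.tgz`.
- The target directory is `/usr/local/scala`.
- Both paths can be overridden through `SCALA_ARCHIVE` and `SCALA_DEST`, which are hypothetical variable names.
- You have permission to write to `/usr/local`.

The helper `install_tarball` is also hypothetical.

```shell
#!/bin/sh
# Sketch: install Scala from the downloaded tar file.
# Hypothetical defaults (assumptions, not from the tutorial); override them
# through environment variables if your file or target directory differs.
SCALA_ARCHIVE=${SCALA_ARCHIVE:-"$HOME/Downloads/scala-2.11.6.tgz"}
SCALA_DEST=${SCALA_DEST:-/usr/local/scala}

# install_tarball ARCHIVE DEST (hypothetical helper)
# Unpacks ARCHIVE into a temporary directory, then moves the single top-level
# directory it contains (e.g. scala-2.11.6/) to DEST. Refuses to overwrite.
install_tarball() {
  if [ -e "$2" ]; then
    echo "$2 already exists; not overwriting." >&2
    return 1
  fi
  tmp=$(mktemp -d) || return 1
  if ! tar -xzf "$1" -C "$tmp"; then
    rm -rf "$tmp"
    return 1
  fi
  top=$(ls "$tmp")
  if ! mv "$tmp/$top" "$2"; then
    rm -rf "$tmp"
    return 1
  fi
  rmdir "$tmp"
}

if [ -f "$SCALA_ARCHIVE" ]; then
  # Writing under /usr/local usually requires root privileges.
  if install_tarball "$SCALA_ARCHIVE" "$SCALA_DEST"; then
    echo "Installed Scala in $SCALA_DEST. Put it on your PATH with:"
    echo "  export PATH=\$PATH:$SCALA_DEST/bin"
  fi
else
  echo "$SCALA_ARCHIVE not found; download Scala first." >&2
fi
```

`export` changes `PATH` only for the current shell session. To keep the setting, add the same line to a shell startup file such as `~/.bashrc`.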

You have to install Java if it is not already installed on your system.

Step 2: Ensure that Scala is installed on your system

Installing the Scala programming language is mandatory before installing Spark, because Spark is written in Scala. The following command verifies the version of Scala on your system: `$ scala -version`

If Scala is already installed on your system, you will see the following response on the screen: `Scala code runner version 2.11.6 - Copyright 2002-2013, LAMP/EPFL`

If you don't have Scala, you have to install it on your system.

Step 3: Download Scala

Download the latest version of Scala. This tutorial uses the scala-2.11.6 version. After downloading, you will find the Scala tar file in the Downloads folder. Next, install Scala from this tar file.
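The Scala check can also be scripted. This is a minimal sketch, assuming a POSIX shell; `scala_version_from` and `binary_version` are hypothetical helpers. The script also prints the major.minor pair (for example 2.11). Prebuilt Spark packages are compiled against one Scala binary version; the Spark 1.3.1 shell output in this tutorial reports Scala 2.10.4.

```shell
#!/bin/sh
# Sketch: check for Scala and report its full and binary (major.minor) version.
# Function names are hypothetical helpers, not part of the Scala tooling.

# Reads `scala -version` output on stdin and prints the version number,
# e.g. "Scala code runner version 2.11.6 - Copyright ..." -> 2.11.6
scala_version_from() {
  sed -n 's/.*version \([0-9][0-9.]*[0-9]\).*/\1/p' | head -n 1
}

# Keeps only major.minor, e.g. 2.11.6 -> 2.11
binary_version() {
  echo "$1" | cut -d. -f1,2
}

if command -v scala >/dev/null 2>&1; then
  # Some Scala launchers print the version banner on stderr, so merge it.
  full=$(scala -version 2>&1 | scala_version_from)
  echo "Scala $full found (binary version $(binary_version "$full"))"
else
  echo "Scala not found on PATH; download and install it first." >&2
fi
```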

Step 1: Ensure that Java is installed on your system

Before installing Spark, Java is a must-have for your system. The following command verifies the version of Java installed on your system: `$ java -version`

If Java is already installed on your system, you will see output like the following:

    java version "1.7.0_71"
    Java(TM) SE Runtime Environment (build 1.7.0_71-b13)
    Java HotSpot(TM) Client VM (build 25.0-b02, mixed mode)
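
The Java check can be scripted. This is a minimal sketch, assuming a POSIX shell (the `$` prompts in this tutorial suggest a Unix-like system). The helper names `have_cmd` and `java_version_from` are hypothetical. `java -version` writes its banner to standard error, so the script merges it into standard output before parsing.

```shell
#!/bin/sh
# Sketch: confirm a Java runtime is on the PATH before installing Spark.
# Helper names are hypothetical, not part of any Java or Spark tooling.

# Succeeds (status 0) if the named command is found on the PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

# Reads `java -version` output on stdin and prints the quoted version,
# e.g. 'java version "1.7.0_71"' -> 1.7.0_71
java_version_from() {
  sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

if have_cmd java; then
  # `java -version` writes to stderr, so merge it into stdout first.
  echo "Java found: $(java -version 2>&1 | java_version_from)"
else
  echo "Java not found on PATH; install a JDK before installing Spark." >&2
fi
```

The same pattern also matches OpenJDK banners such as `openjdk version "11.0.2"`, because it only looks for the quoted string after `version`.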
